Bayesian Statistics

7.4 Comparison to classical methods

You will have seen a different method of carrying out a hypothesis test before, looking something like this.


Definition 7.4.1 The classical hypothesis test is the following procedure:


1. Choose a model family \((M_\theta )_{\theta \in \Pi }\), choose a value \(\theta _0\in \Pi \) and define \(H_0\) to be the model \(M_{\theta _0}\). This is often written in shorthand as \(H_0:\theta =\theta _0\).


2. Calculate a value \(p\) as follows. Assume that \(H_0\) is true i.e. use the model \(M_{\theta _0}\) and using this model, calculate \(p\) to be the probability of observing data that is (in some chosen sense) ‘at least as extreme’ as the data \(x\) that we actually observed.

If \(p\) is sufficiently small (in some chosen sense) then reject \(H_0\).


There is no need for an ‘alternative hypothesis’ here. More specifically, rejecting \(H_0\) means that we think it is unlikely that our chosen model \(M_{\theta _0}\) would generate the data \(x\), so consequently we think it is unlikely that \(M_{\theta _0}\) is a good model.

There is nothing else to say here! Rejecting \(H_0\) does not mean that the ‘alternative hypothesis’ \(H_1\) that \(\theta \neq \theta _0\) is accepted (or true). If \(p\) turns out to be small it means that either (i) \(M_{\theta _0}\) is a good model and our data \(x\) was unlikely to have occurred or, (ii) \(M_{\theta _0}\) is a bad model for our data. Neither statement tells us what a good model might look like. Unfortunately hypothesis testing is very often misunderstood, and rejection of \(H_0\) is incorrectly treated as though it implies that \(H_1\) is true.

If we do not reject \(H_0\), then it means that the model \(M_{\theta _0}\) is reasonably likely to generate the data we have. This leaves open the possibility that there may be lots of other models, not necessarily within our chosen model family, that are also reasonably likely to generate the data we have. This point is sometimes misunderstood too.
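The point about failing to reject can be illustrated with a small Python sketch. Everything in it beyond Definition 7.4.1 is an assumption made for illustration: the data (6 correct answers out of 10) is hypothetical, 'at least as extreme' is taken to mean 'at least as many correct answers', 'sufficiently small' is taken to mean below \(0.05\), and the function name `upper_tail_p_value` is our own.

```python
from math import comb

def upper_tail_p_value(n, k, theta):
    """P(at least k successes in n independent Bernoulli(theta) trials)."""
    return sum(comb(n, j) * theta**j * (1 - theta)**(n - j)
               for j in range(k, n + 1))

# Hypothetical data, not taken from the text: 6 correct answers out of 10.
n, k = 10, 6
threshold = 0.05  # assumed meaning of 'sufficiently small'

# Test H_0: theta = theta_0 separately for several candidate values theta_0.
candidates = (0.4, 0.5, 0.6, 0.7)
not_rejected = [t for t in candidates
                if upper_tail_p_value(n, k, t) >= threshold]
print(not_rejected)
```

Under these assumptions none of the four candidate models is rejected, so failing to reject \(H_0\) does not single out \(M_{\theta _0}\) as the correct model.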


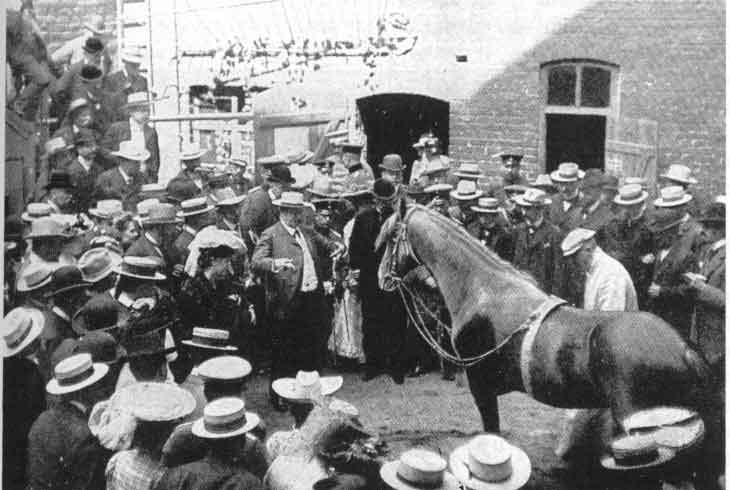

Example 7.4.2 A famous example of these mistakes comes from the ‘clever Hans’ effect. Hans was a horse who appeared to be able to do arithmetic, owned by a mathematics teacher Wilhelm von Osten. Von Osten would ask Hans (by speaking out loud) to answer various questions and Hans would reply by tapping his hoof. The number of taps was interpreted as a numerical answer. Hans answered the vast majority of questions correctly.

To construct a hypothesis test using Definition 7.4.1, take a model family \(M_{\theta }\sim \Bern (\theta )^{\otimes n}\), where the data \(x=(x_1,\ldots ,x_n)\) corresponds to \(x_i=1\) for solving the \(i^{\text {th}}\) question correctly, and \(x_i=0\) for answering it incorrectly. We don’t know exactly how hard the arithmetic questions were, so let us suppose that the probability of Hans solving a question correctly by guessing at random is \(\theta =\frac 12\) (this is clearly a very generous assumption for arithmetic). So, take

\[H_0:\text { that }\theta =1/2\text {, i.e.~the horse solves the questions at random}\]

and then the model we wish to test is \(M_{\frac 12}\). The horse is asked \(n=10\) questions, and it answers them all correctly. Our model \(M_{\frac 12}\) says the probability of this is \((\frac 12)^{10}\approx 0.001=p\). We reject \(H_0\). Taking any value \(\theta \leq \frac 12\) will lead to the same conclusion.
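The arithmetic here can be checked with a short Python sketch. The function name `upper_tail_p_value` is our own, and we assume that ‘at least as extreme’ means ‘at least as many correct answers’, which Definition 7.4.1 leaves as a choice.

```python
from math import comb

def upper_tail_p_value(n, k, theta):
    """P(at least k successes in n independent Bernoulli(theta) trials)."""
    return sum(comb(n, j) * theta**j * (1 - theta)**(n - j)
               for j in range(k, n + 1))

# Hans answers all n = 10 questions correctly; test H_0: theta = 1/2.
p = upper_tail_p_value(10, 10, 0.5)
print(p)  # 0.0009765625, which is (1/2)**10

# Smaller values of theta make ten correct answers even less likely.
p_smaller_theta = [upper_tail_p_value(10, 10, t) for t in (0.1, 0.25, 0.5)]
print(p_smaller_theta)
```

Since the only outcome at least as extreme as ten correct answers is ten correct answers, the sum has a single term \(\theta ^{10}\), which is increasing in \(\theta \). This is why every \(\theta \leq \frac 12\) gives a \(p\)-value no larger than \((\frac 12)^{10}\).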

So, we expect that our model \(M_{\theta }\) is a bad description of reality, for each \(\theta \leq \frac 12\). This does not mean that we must accept \(H_1\) and believe the horse is doing arithmetic i.e. that some alternative model \(M_\theta \) is correct for some larger value of \(\theta \). In fact, what was going on is that Hans had learnt to read the body language of Wilhelm von Osten, who leaned forwards whilst Hans was tapping his hoof and straightened up as soon as the correct number of taps had been reached. This was established by the psychologist Oskar Pfungst, who tested Hans and von Osten under several different conditions in a laboratory.

In short, our model that the horse ‘solves’ questions is a bad choice. The horse answers questions correctly but it does not solve questions. To distinguish between these two situations we need a better model than \((M_\theta )\), as Pfungst did in his laboratory. His model included (amongst other things) an extra variable for whether Hans could see von Osten.

After the investigations by Pfungst were done, von Osten refused to believe what Pfungst had discovered, and continued to show Hans around Germany. They attracted large and enthusiastic crowds, and made a substantial amount of money from doing so. Many in the audience wondered if they should accept \(H_1\).

The point of including this example in our course – in which we focus on Bayesian methods – is to note that errors in interpretation are less common when using Bayesian approaches. The reason is simply that the Bayesian approach uses the framework of conditional probability, so we state our results in terms of conditional probabilities and odds ratios. This makes our assumptions and conclusions clear.

By contrast, the \(p\)-value from Definition 7.4.1 is not a conditional probability: conditioning the model \((M_\theta )\) on the event \(\{\Theta =\theta _0\}\) is only possible if we have a random variable \(\Theta \) to condition on, and Definition 7.4.1 does not include such a random variable. However, if we take \(\Theta \) to have a uniform prior and \((M_\theta )\) is well-behaved enough (e.g. Assumption 1.3.2) then it can be shown that \(M_\Theta |_{\{\Theta =\theta _0\}}\eqd M_{\theta _0}\). In that situation the \(p\)-value is the conditional probability, given \(\{\Theta =\theta _0\}\), of observing data that is (in some chosen sense) at least as extreme as what we did observe. Calculating \(p\) exactly is often difficult, so it is common to use approximation theorems instead. These theorems tend to contain complicated assumptions that are difficult to state correctly, particularly when data points may not be fully independent of each other. The combined result is that Definition 7.4.1 gives a procedure with many potential sources of error.


Remark 7.4.3 I do not mean to imply that using the Bayesian framework would certainly have avoided making the mistake detailed in Example 7.4.2. Only that, because the framework would make us state our assumptions and conclusions more clearly, we can then more easily question which of our assumptions was incorrect.

When a horse claims to do arithmetic we are naturally suspicious. In more subtle situations it is harder to find mistakes.

Similar considerations apply to the comparison between HPDs and confidence intervals. For example, a \(95\%\) confidence interval \([a,b]\) is often incorrectly treated as a statement that the ‘event’ \(\theta \in [a,b]\) has \(95\%\) probability. An HPD actually is a statement to that effect, about the posterior distribution \(\Theta |_{\{X=x\}}\), which makes it much easier to interpret.
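As a rough illustration of what an HPD says, here is a Python sketch using the data from Example 7.4.2. It rests on assumptions that are ours rather than part of that example: we take a uniform prior for \(\Theta \) on \([0,1]\), so that after 10 correct answers out of 10 the posterior density is proportional to \(\theta ^{10}\), i.e. a \(\text {Beta}(11,1)\) distribution with distribution function \(\theta ^{11}\). The variable and function names are hypothetical.

```python
# Assumptions (ours): uniform prior on [0, 1], Bernoulli model,
# and the data from Example 7.4.2 (10 correct answers out of 10).
n_correct = 10
level = 0.95

def posterior_prob(lo, hi):
    """Posterior probability that Theta lies in [lo, hi].

    The posterior is Beta(n_correct + 1, 1), whose distribution
    function on [0, 1] is t ** (n_correct + 1).
    """
    return hi ** (n_correct + 1) - lo ** (n_correct + 1)

# The posterior density is increasing on [0, 1], so the highest-density
# region of a given probability is an interval of the form [a, 1].
a = (1 - level) ** (1 / (n_correct + 1))

print(a)                       # lower endpoint of the 95% HPD
print(posterior_prob(a, 1.0))  # posterior probability of [a, 1]
```

Under these assumptions \(a\) is roughly \(0.76\), and the statement ‘\(\Theta \in [a,1]\) has \(95\%\) probability’ is literally true of the posterior distribution \(\Theta |_{\{X=x\}}\). As in Remark 7.4.3, this says nothing about whether the model itself is a sensible description of what Hans was doing.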

Figure 7.1: Hans the horse in 1904, correctly answering arithmetic questions set by his owner Wilhelm von Osten.